SUMMARY:

PostgreSQL utilizes TOAST (The Oversized-Attribute Storage Technique) to efficiently store large column values, though this mechanism can trigger performance-degrading disk spills during complex queries if not properly managed.

- TOAST automatically compresses and relocates oversized data types, such as JSONB or TEXT, into separate storage tables to keep main table pages lean and efficient.

- Operations that require reassembling these large values, such as sorting, hashing, or aggregations, often exceed allocated memory (

work_mem) and trigger the creation of temporary files on the disk. - Database administrators can mitigate these spikes by avoiding direct sorts on TOASTed columns, cautiously increasing memory limits for specific sessions, or utilizing external storage options.

By mastering these storage behaviors, organizations can ensure that queries involving massive datasets remain performant without exhausting system resources.

Table of contents

PostgreSQL is renowned for its reliability and efficiency in handling large datasets. One of its lesser-known yet crucial features is TOAST (The Oversized-Attribute Storage Technique). TOAST allows PostgreSQL to store massive column values without bloating the main table. While this feature is extremely useful, it can sometimes contribute to high temporary disk usage during certain queries and operations. In this blog, we’ll explore TOAST tables, how they work, and how they impact temp usage, with practical examples.

Introduction

PostgreSQL is renowned for its reliability and efficiency in handling large datasets. One of its lesser-known yet crucial features is TOAST (The Oversized-Attribute Storage Technique). TOAST allows PostgreSQL to store massive column values without bloating the main table. While this feature is extremely useful, it can sometimes contribute to high temporary disk usage during certain queries and operations. In this blog, we’ll explore TOAST tables, how they work, and how they impact temp usage, with practical examples.

What Are TOAST Tables?

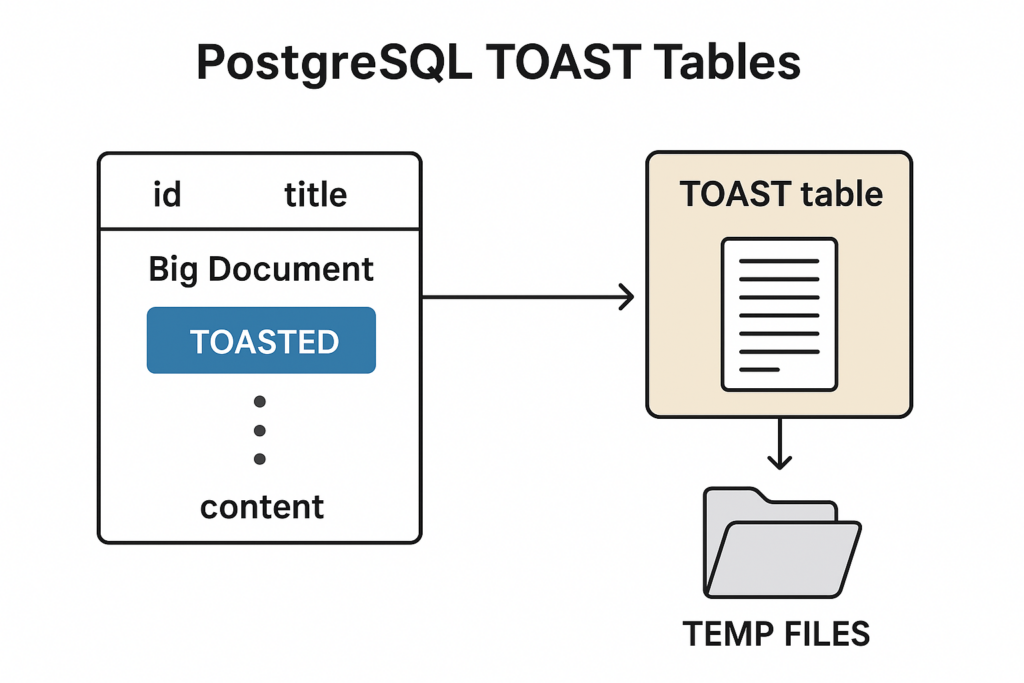

PostgreSQL stores table data in pages of roughly 8 KB. When a row contains large column values such as TEXT, BYTEA, JSONB, or XML, it may span multiple pages. PostgreSQL handles this by:

- Compressing the data if possible.

- Moving the oversized column to a TOAST table, leaving only a pointer in the main table.

Key characteristics of TOAST tables:

- Automatically created and managed for large columns.

- Store data in a compressed, chunked format for efficient retrieval.

- Fully transactional; updates, inserts, and deletes follow ACID rules.

Example: How TOAST is Triggered

To demonstrate TOAST clearly, consider this setup:

DROP TABLE IF EXISTS documents;

CREATE TABLE documents (

id serial PRIMARY KEY,

content text

);

DO $$

DECLARE

bigtext text;

BEGIN

-- Insert ten rows of roughly fifty megabytes each

FOR i IN 1..10 LOOP

bigtext := (

SELECT string_agg(md5(random()::text), '')

FROM generate_series(1, 500000) -- Generates ~50 MB of *uncompressible* text

);

INSERT INTO documents(content) VALUES (bigtext);

END LOOP;

END

$$;This example inserts 10 rows, each containing approximately 50 megabytes of random MD5 strings. Since this text is highly uncompressible, PostgreSQL is forced to store it entirely in the TOAST table in chunked form. You can verify the TOAST table with:

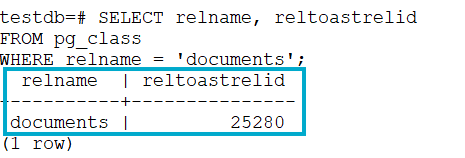

SELECT relname, reltoastrelid

FROM pg_class

WHERE relname = 'documents';Sample output:

If reltoastrelid is nonzero, TOAST storage is being used.

How TOASTed Values Increase Temporary Disk Usage

TOAST reduces table bloat, but queries that operate on TOASTed columns often require PostgreSQL to fetch and reassemble the large values. When the amount of data exceeds work_mem, PostgreSQL spills to disk and creates temporary files.

You will see temp usage increase during:

- Sorting large values.

- Distinct operations on large TEXT or BYTEA columns.

- Joins that involve TOASTed columns.

- Aggregations such as STRING_AGG or JSONB_AGG.

- Index creation on large TOASTed columns.

You can monitor this activity through:

SELECT datname, temp_files, temp_bytes

FROM pg_stat_database;Demonstration: TOAST Causing a Temporary Disk Spike

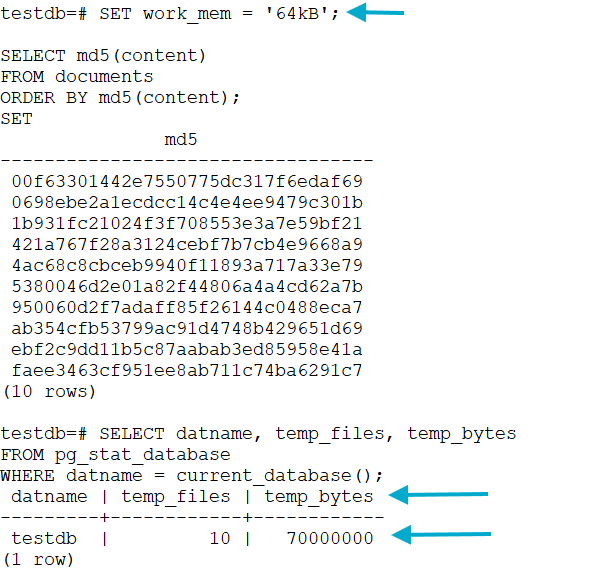

In the same session, reduce work_mem drastically to force PostgreSQL to spill to disk:

SET work_mem = '64kB';Now execute a sort that touches the TOASTed content:

SELECT md5(content)

FROM documents

ORDER BY md5(content);Because content is nearly fifty megabytes per row and stored in TOAST chunks, PostgreSQL must fetch and process large amounts of data. Since the memory limit is just 64 kilobytes, the sort cannot fit in RAM, and PostgreSQL writes temporary files to disk.

You can verify the temp usage immediately afterward:

SELECT datname, temp_files, temp_bytes

FROM pg_stat_database

WHERE datname = current_database();Sample Output:

This example clearly shows how TOASTed data triggers the creation of temporary files, especially during operations such as sorting.

Best Practices to Manage Temp Usage with TOAST

- Increase work_mem cautiously for sessions that require sorting large values.

- Avoid sorting or grouping directly on TOASTed columns.

- Use STORAGE EXTERNAL or MAIN depending on your compression needs.

- Store large objects externally if they do not compress well.

- Split extremely large TEXT or JSON into multiple columns when possible.

- Use staging tables for transformation steps that repeatedly scan large values.

Conclusion

TOAST is an essential part of PostgreSQL and allows large values to be stored efficiently. However, queries that require processing TOASTed data can lead to significant temporary disk usage, especially when memory settings are low. Understanding how TOAST works and applying the right strategies helps ensure stable performance even with very large datasets.

Please contact us for any questions.