SUMMARY:

Modern computing professionals must clearly distinguish between the physical processor socket, the independent execution core, and the dependent thread to accurately calculate a system’s true processing capacity in environments dominated by parallel workloads.

- Processor sockets are the most tangible of the three: the physical receptacle (or, by extension, the package) connecting the CPU to the motherboard, and the oldest of the three concepts.

- Processor cores are independent execution units within a package, becoming the most critical processor metric for planning systems with parallel workloads after processor clock speeds plateaued around 2005.

- Processor threads are “logical processors” enabled by techniques such as simultaneous multithreading (SMT) or Intel’s Hyper-Threading Technology, which efficiently boost performance by providing a second set of registers to avoid slow context switches.

Accurately assessing the total number of CPUs requires multiplying the system’s sockets by the cores per socket and the threads per core, information readily available using operating system tools like lscpu on Linux or the Task Manager on Windows.


What do you mean, exactly, by “CPU”?

Once upon a time, computers sat on our desks; we knew exactly how many processors they had; and back then our biggest uncertainty was whether they could handle Y2K. Fast forward to today: our computers have 4, 8 or 16 processors — or quite possibly all of those at the same time, depending on how you define “processor.” (I’m using the terms “CPU” and “processor” interchangeably.) In this post I’ll describe “processor sockets,” “processor cores,” and “processor threads” with the goal of being able to answer “just how many CPUs do I have here?”

Most of us can readily rattle off the three words behind the initialism “CPU”: “central processing unit!” With today’s complex processors, however, we’re now faced with having to clarify whether the unit we speak of is

- a hardware package (a “socket”),

- an independent execution unit (a “core”) within that package, or

- a largely dependent unit (a “thread”) within that core that is more logical abstraction than anything else.

Why do we now have this complexity? And, how did we get here? A brief look at each of these concepts should put it all in context.

Processor Sockets

This is the oldest of the three concepts, and the easiest to understand. A CPU socket is a receptacle, a physical connector linking the processor to the computer’s motherboard (the primary circuit board for the computer). Most PCs of the Y2K era (the 1990s) had just one CPU (and hence just one CPU socket). If you wanted another CPU then you needed a motherboard with another CPU socket. Two-socket PC motherboards first appeared around 1995. Multiple-socket motherboards were quite expensive and thus tended to be limited to higher-end servers.

You also needed an OS capable of making use of the additional processors, one with SMP (symmetric multiprocessing) support: Windows 98 wouldn’t work (but Windows NT would), and if you were using Linux back then you’d probably need to recompile your kernel.

The number of CPU sockets on your motherboard is, of course, the same as the number of discrete CPU packages in your system (assuming you didn’t leave any sockets empty). By extension, the term “processor sockets” can refer to these discrete CPU packages themselves — handy when needing to distinguish this sense from the other two processor senses. A processor in the socket-sense is quite tangible: it’s something you can buy. (You can’t buy a core by itself — although you could rent one in the cloud!)

Processor Cores

Around 2005 both AMD and Intel started taking multiple processors and putting them in the same package: think of this as two CPUs sharing the same socket. There are some technical advantages to doing so (e.g. faster cache coherency, as it’s not limited by the motherboard) along with manufacturing advantages (the cores are usually, although not always, manufactured on the same die). Processor manufacturers turned to this model (more cores rather than faster cores) once clock speeds had reached the GHz range and could no longer be increased significantly. Each processor core can execute code independently whatever the layout (whether the cores are all on the same die, share the same processor socket, or each have their own processor socket), although there are slight variances due to shared caches and bus layout.

Total core count is today the most important processor metric when planning systems with independent parallel workloads (such as those of today’s servers), taking the primary place CPU clock frequency held for comparing processors two decades ago.

Processor Threads

Processor threads are “logical processors”: the CPU reports more processors than physically exist.

In 2002 Intel Corporation introduced the “Xeon MP” and “Pentium 4 HT” processors, the first processors with what Intel called “Hyper-Threading Technology.” Still commonly called “hyperthreading” (the Kleenex of the processor world; the generic term is “simultaneous multithreading” or “SMT”), it was an attempt to speed computer processing by having another thread ready to run, and for the most part it worked. I say “for the most part” because its advantage turned out to be highly workload dependent: it sped some workloads up dramatically and slowed others down considerably. Many guides recommended disabling hyperthreading in the BIOS, particularly early on before software became hyperthreading-aware. Overall, however, hyperthreading has been a net gain, boosting performance (some say by 15%) for generic workloads, which is why we still commonly see it on new processors today.

Hyperthreading is a hardware solution to an old problem. Processors are often data-starved, waiting on I/O requests from slower storage. Operating systems addressed this problem by giving the processor a different program or thread when the current one runs out of work: this is called a context switch. The problem with context switches is that they are slow: the current state of the thread has to be copied from the CPU registers (super-fast memory locations within the CPU) back to main memory (RAM, which is very slow by comparison), and the new thread’s state then has to be copied into those registers. This process takes a few microseconds, which amounts to a few thousand CPU cycles.
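To see why a few microseconds translates into a few thousand cycles, here is a minimal sketch of the conversion. The function name `cycles_elapsed` and the example figures (a 3 microsecond switch on a 1 GHz core) are assumptions chosen for illustration, not measurements.

```python
def cycles_elapsed(duration_us: int, clock_mhz: int) -> int:
    """Clock cycles that pass in duration_us microseconds at clock_mhz MHz.

    1 MHz is one million cycles per second, i.e. one cycle per microsecond,
    so the conversion is a plain multiplication.
    """
    return duration_us * clock_mhz


# Assumed figures: a 3 microsecond context switch on a 1 GHz (1000 MHz) core.
print(cycles_elapsed(duration_us=3, clock_mhz=1000))  # → 3000
```

At higher clock speeds the same pause costs proportionally more cycles, which is part of why avoiding the switch is attractive.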

Hyperthreading solved this problem by having a second set of these super-fast registers already loaded and ready to go. This second set of registers, which increases the processor die size by only 5%, is presented to the computer as a second CPU, using the same SMP architecture designed for multiple processors. Hyperthreading doesn’t double your performance because it doesn’t give you a second physical processing unit: instead you just get a second set of CPU registers masquerading as a second CPU.

There are many architecture-specific nuances when it comes to processor threads and their degree of autonomy. All implementations, however, have this in common: threads are dependent (at least partially if not entirely) on resources shared with other threads on the same core.

In summary:

- processor socket — a package of processors sharing a physical connection to the motherboard

- processor core — an independent processor

- processor thread — a “logical processor” sharing resources with other threads on the same core

The 4/8/16-processor computer in this post’s opening paragraph is a four-socket server with dual-core CPUs in each socket, each core having two threads. It has:

- 4 processor sockets,

- 8 total processor cores, or

- 16 total processor threads.
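The arithmetic behind those three numbers can be sketched in a few lines of Python. This is only an illustration of the counting; the function name `cpu_counts` and its parameter names are hypothetical.

```python
def cpu_counts(sockets: int, cores_per_socket: int, threads_per_core: int) -> dict:
    """Return the three ways of counting "CPUs" for one machine."""
    total_cores = sockets * cores_per_socket
    total_threads = total_cores * threads_per_core
    return {"sockets": sockets, "cores": total_cores, "threads": total_threads}


# The four-socket, dual-core, two-threads-per-core server described above.
print(cpu_counts(sockets=4, cores_per_socket=2, threads_per_core=2))
# → {'sockets': 4, 'cores': 8, 'threads': 16}
```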

So how many CPUs do I have?

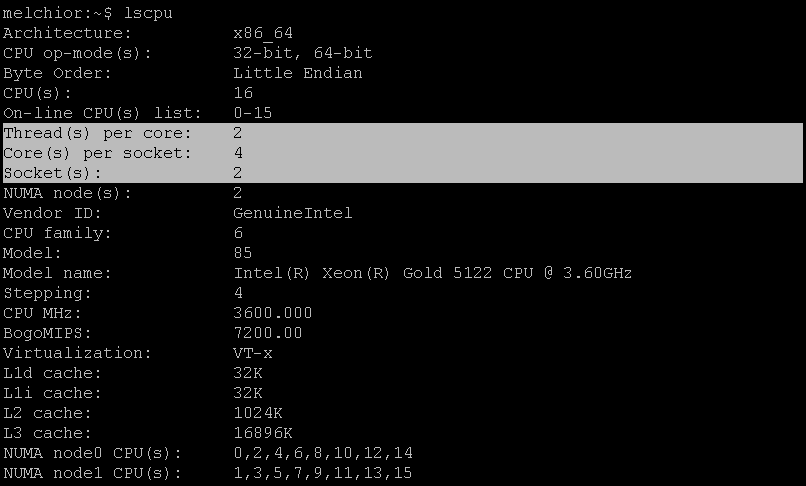

You can see the number of sockets, cores, and threads on a Linux server via the “lscpu” command and focusing on the “Thread(s) per core,” “Core(s) per socket” and “Socket(s)” lines:

The total number of processor threads can be calculated by multiplying the number of sockets by the number of cores per socket and the number of threads per core.
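That multiplication can be automated. The sketch below assumes the lscpu output uses exactly the three field names quoted above; the helper name `total_threads_from_lscpu` is hypothetical, and the sample text is made up for illustration rather than copied from the screenshot.

```python
# Illustrative lscpu excerpt (made-up values, not taken from a real machine).
SAMPLE_LSCPU = """\
Thread(s) per core:  2
Core(s) per socket:  4
Socket(s):           1
"""


def total_threads_from_lscpu(text: str) -> int:
    """Multiply sockets x cores per socket x threads per core from lscpu text."""
    wanted = ("Thread(s) per core", "Core(s) per socket", "Socket(s)")
    values = {}
    for line in text.splitlines():
        # Each lscpu line has the form "Field name:   value".
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key in wanted:
            values[key] = int(value.strip())
    missing = [k for k in wanted if k not in values]
    if missing:
        raise ValueError(f"missing lscpu fields: {missing}")
    return (
        values["Socket(s)"]
        * values["Core(s) per socket"]
        * values["Thread(s) per core"]
    )


print(total_threads_from_lscpu(SAMPLE_LSCPU))  # → 8
```

On a real Linux host you could feed it live output instead of the sample, for example `subprocess.run(["lscpu"], capture_output=True, text=True).stdout`. If your lscpu version labels the fields differently, the function raises rather than guessing.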

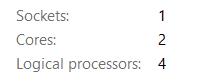

On Windows 10 you can see the breakdown under the Performance tab of the Task Manager (Ctrl-Shift-Esc to launch Task Manager; click “More details” if you don’t see tabs):

Note that, on Windows, processor threads are called “Logical processors” (arguably a better name). Task Manager shows total Cores (rather than cores-per-socket) and total threads (total Logical processors, rather than threads-per-core).
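If you would rather ask from a script than from a GUI, Python's standard library offers a quick cross-platform check. Note that `os.cpu_count()` reports logical processors (threads in this post's terms), not cores or sockets, so treat this as a sketch of one of the three counts only.

```python
import os

# os.cpu_count() returns the number of logical CPUs in the system,
# or None if the count cannot be determined.
logical = os.cpu_count()

if logical is None:
    print("logical processor count unavailable")
else:
    print(f"{logical} logical processors")
```

Getting the core and socket counts takes a platform-specific tool such as lscpu or Task Manager, as described above.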