Indexes are Good for Performance

Generally speaking, DBAs and developers spend a fair amount of their performance time trying to find which indexes to add to a database to improve performance. If a specific query is having performance problems, one of the strongest tools in our box is indexing.

Indexes are Bad for Performance

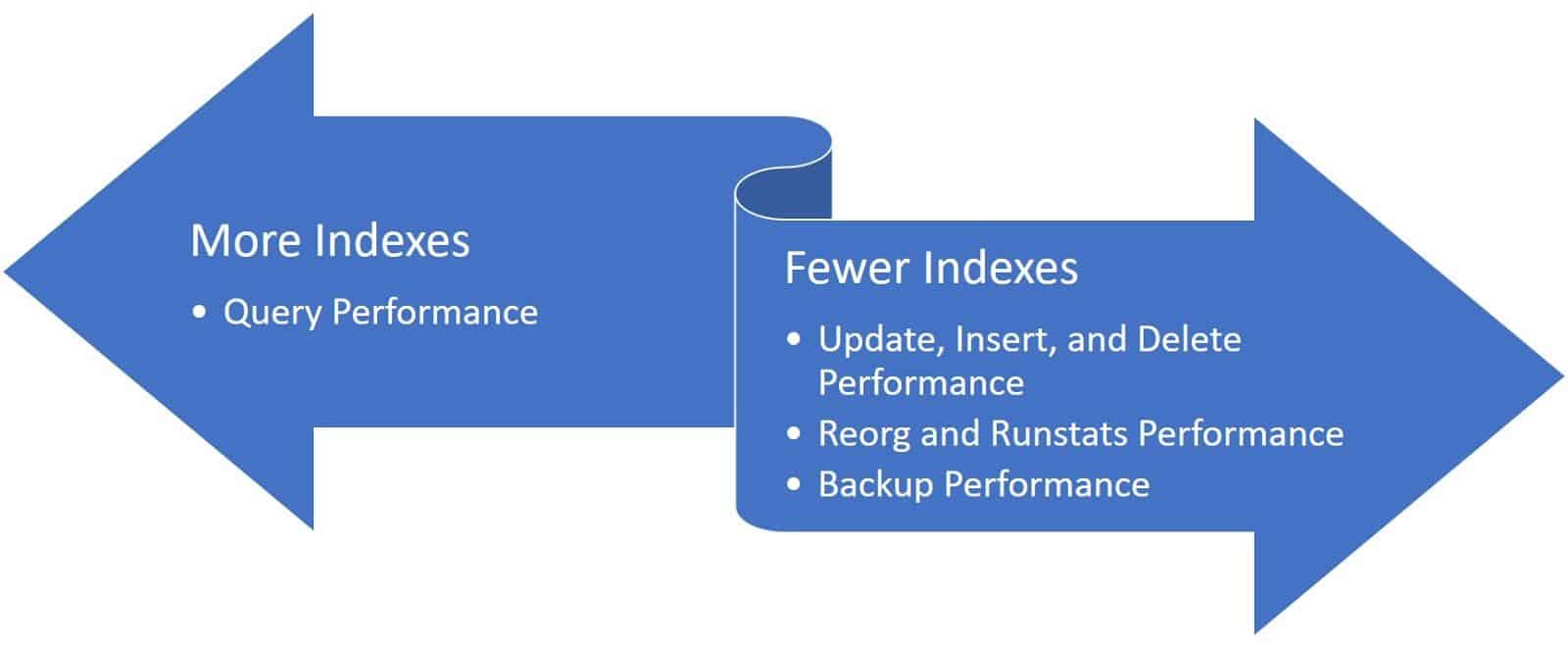

Indexes aren’t all good, though. Putting aside the fact that some indexes, particularly low-cardinality indexes, can actually hurt performance, indexes are physically stored on disk. This means that not only do they take up disk space, but they also hurt the performance of operations that insert, update, or delete data. Each additional index represents one more place on disk Db2 has to go insert, update, or delete the same data that is stored in the table. Indexes also have to be maintained through reorgs and runstats and backed up with the rest of the database.

This means that you must decide on your priorities for performance when deciding how many indexes to add. These priorities may be different from table to table within the same database. It is often helpful to categorize tables in your data model to understand their performance priorities.

Many Db2 consultants have horror stories of going into a client and finding 30 or more indexes on a table that is critical to OLTP performance. At least one consultant I know claims to have put his daughters through college and paid for their weddings by dropping indexes.

There is no magic number for the correct number of indexes on a table. For Db2, often a good index that helps multiple queries is better than a perfect index for every query. Often databases on the analytics/BI/Data Warehouse end of the spectrum are likely to have more indexes than those on the OLTP/transaction processing/e-commerce end of the spectrum. This is because the priority in analytics databases is queries, while the priority in transaction processing databases is often the small singleton-row updates, inserts, and deletes that additional indexes can slow down.

Identifying Unused Indexes

With Db2 9.7, IBM introduced a column in several system views, including SYSCAT.INDEXES, that records the last time an object was used. This column, called LASTUSED, is not updated immediately upon usage of an object to prevent performance impact but is generally accurate. There were some issues with early fix packs of 9.7, so the numbers should not really be considered accurate if you’re running older code. However, on later fix packs of 9.7 and the later versions, this information is a very valuable tool.

My favorite query for finding unused indexes is:

select i.lastused,

substr(t.tabschema,1,20) as tabschema,

substr(t.tabname,1,30) as tabname,

substr(i.indschema,1,20) as indschema,

substr(indname,1,40) as indname,

substr(colnames,1,60) as colnames,

bigint(fullkeycard)as fullkeycard,

bigint(card) as table_card,

case

when card > 0 then decimal(float(fullkeycard)/float(card),5,2)

else -1

end as pct_card,

mi.index_scans,

mt.table_scans,

mi.index_only_scans,

mi.page_allocations,

volatile

from syscat.indexes i join syscat.tables t

on i.tabname=t.tabname and i.tabschema=t.tabschema

join table(mon_get_index('','',-2)) as mi on i.iid=mi.iid and i.tabschema=mi.tabschema and i.tabname = mi.tabname

join table(mon_get_table('','',-2)) as mt on i.tabschema=mt.tabschema and i.tabname=mt.tabname

where

indextype not in ('BLOK', 'DIM')

and t.tabschema not like 'SYS%'

and uniquerule='D'

and not exists (select 1

from syscat.references r join syscat.keycoluse k

on r.tabschema=k.tabschema and r.tabname=k.tabname

where t.tabschema=r.tabschema

and r.tabname = t.tabname

and k.colname in ( select colname

from syscat.indexcoluse as ic

where ic.indschema=i.indschema

and ic.indname=i.indname))

and i.lastused < current timestamp - 30 days

order by mi.index_scans, i.lastused, fullkeycard, card

with ur;

Sample output:

LASTUSED TABSCHEMA TABNAME INDSCHEMA INDNAME COLNAMES FULLKEYCARD TABLE_CARD PCT_CARD INDEX_SCANS TABLE_SCANS INDEX_ONLY_SCANS PAGE_ALLOCATIONS VOLATILE

---------- -------------------- ------------------------------ -------------------- ---------------------------------------- ------------------------------------------------------------ -------------------- -------------------- --------------- -------------------- -------------------- -------------------- -------------------- --------

01/01/0001 DBA LOCK_EVENT DBA LOCK_EVENT_IND1 +XMLID -1 -1 -1.00 0 1 0 43

01/01/0001 DBA LOCK_ACTIVITY_VALUES DBA LOCK_ACTIVITY_VALUES_IND1 +XMLID+PARTICIPANT_NO+ACTIVITY_ID+UOW_ID -1 -1 -1.00 0 1 0 1

01/01/0001 WSCOMUSR GRGFTREG WSCOMUSR GRG_CONSTRAINT1 +STOREID 1 29509 0.00 0 3738 0 0

01/01/0001 WSCOMUSR GRADDR WSCOMUSR GRADDR_INDEX2 +LASTNAME+FIRSTNAME+STATE 10030 31774 0.31 0 0 0 1

01/01/0001 WSCOMUSR GRGFTREG WSCOMUSR GR_INDEX2 +POSTEVENTADDRESS_ID 29509 29509 1.00 0 3738 0 0

01/01/0001 WSCOMUSR GRGFTREG WSCOMUSR GR_INDEX3 +PREEVENTADDRESS_ID 29509 29509 1.00 0 3738 0 0

6 record(s) selected.This query attempts to find indexes that are not required for unique constraints, foreign keys, or block or dimension indexes for MDC tables. It looks for indexes that have not been used in the last 30 days. Consider if your database might have other requirements to consider. Some indexes may only be used at month-end, quarter-end, year-end, or during some special annual period like Black Friday.

Backout Planning

Everything you do in a Db2 environment should have a back out plan. Before dropping an index, make sure you have the syntax to recreate it. db2look is a great tool for this. Be sure to retain this syntax for at least a few months in case the index ends up being something that was needed for a periodic process several months down the line. Re-creating an index if performance problems are encountered is nearly always quick and easy.

Verifying Impact

To understand the impact of a dropped index, it is best to get a baseline in a load testing environment, then drop the index and repeat the load test to see what, if any difference is made. The criteria for success is lack of degradation. You may not see improvement for each index, but you are still saving disk space and other resources.

If you don’t have a load-test environment, it is unlikely that basic functional testing will help, but it can’t hurt, so still do the drop in a non-production environment first. If possible, take a baseline before the drop and compare to after the drop to see if there are any significant differences.

For a truly unused index, you may not see a positive impact, but it is still beneficial to remove it.

Summary

Removing indexes can help performance or help prevent performance degradation, particularly for OLTP environments. It can free up disk space both for the database and backups and make backups and table maintenance run faster. Contact the expert XTIVIA Db2 DBAs today to discuss a project to identify and carefully remove unused indexes.