When you create an S3 Replication rule, objects that were uploaded before the rule existed are not replicated. These objects must be replicated manually with an S3 Batch Operations job. The same approach also works for occasional one-off replications.

Step-By-Step Guide

1. Browse to the S3 page and choose Batch Operations from the left menu.

2. On the Batch Operations page, click Create a job to create a new batch job.

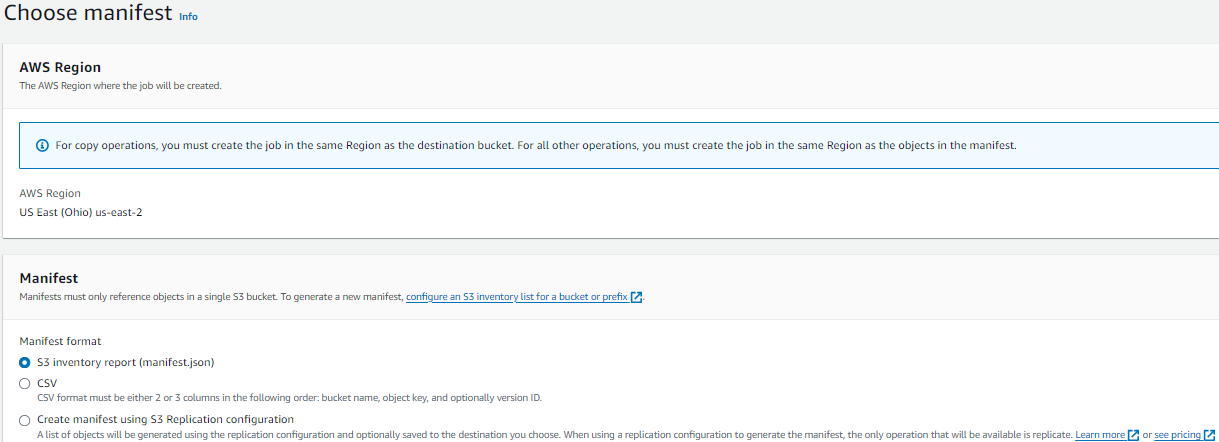

3. On the Choose Manifest page, choose the type of manifest to use. A manifest is a file that lists the objects to replicate or copy. AWS uses it to determine which objects from the source bucket(s) need to be replicated or copied to the destination bucket(s).

There are 3 options for manifest files.

- S3 inventory report (manifest.json) – This is an AWS inventory report that can be created by going to the source bucket -> Management -> Inventory configurations. Choose Create inventory configuration and follow the steps to have AWS create a manifest.json file that can be used here.

- CSV – You can choose to create your own file to include multiple source buckets and objects. The minimum required file has two columns without headers (bucket name and object key). A third column for Version ID can be added if needed.

- Sample:

    Examplebucket,objectkey1,PZ9ibn9D5lP6p298B7S9_ceqx1n5EJ0p
    Examplebucket,objectkey2,YY_ouuAJByNW1LRBfFMfxMge7XQWxMBF
    Examplebucket,objectkey3,jbo9_jhdPEyB4RrmOxWS0kU0EoNrU_oI
    Examplebucket,photos/jpgs/objectkey4,6EqlikJJxLTsHsnbZbSRffn24_eh5Ny4
    Examplebucket,photos/jpgs/newjersey/objectkey5,imHf3FAiRsvBW_EHB8GOu.NHunH
    Examplebucket,object%20key%20with%20spaces,9HkPvDaZY5MVbMhn6TMn1YTb5A

- Create manifest using S3 Replication configuration – If you already set up a replication rule, this is the easiest option for copying existing objects using the same rules as the replication.
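For the CSV option, the manifest can be generated rather than typed by hand. The following is a minimal sketch, assuming keys should be URL-encoded the way the sample shows (`object%20key%20with%20spaces`); the function name `build_manifest_csv` is hypothetical and not part of any AWS tooling.

```python
import csv
import io
from urllib.parse import quote


def build_manifest_csv(rows):
    """Return CSV manifest text for (bucket, key, version_id) tuples.

    version_id may be None, in which case the row has only two columns.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for bucket, key, version_id in rows:
        # URL-encode the key but keep "/" so prefixes stay readable.
        row = [bucket, quote(key, safe="/")]
        if version_id is not None:
            row.append(version_id)
        writer.writerow(row)
    return buffer.getvalue()


manifest_text = build_manifest_csv([
    ("Examplebucket", "objectkey1", "PZ9ibn9D5lP6p298B7S9_ceqx1n5EJ0p"),
    ("Examplebucket", "photos/jpgs/objectkey4", "6EqlikJJxLTsHsnbZbSRffn24_eh5Ny4"),
    ("Examplebucket", "object key with spaces", "9HkPvDaZY5MVbMhn6TMn1YTb5A"),
])
print(manifest_text)
```

The resulting text has no header row, matching the two- or three-column layout described above. Use the same number of columns on every row.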

To move data between buckets, there are 2 basic batch operations: copy and replicate. Both work the same but have different requirements.

- Copy – Allows for only 1 destination bucket. The batch operation must be created in the same region as the destination bucket. Copy can be used with all 3 types of manifests. There are several options that can be used with Copy.

- Replicate – Allows for multiple destination buckets. The batch operation must be created in the same region as the destination bucket. Replicate can be used with all 3 types of manifests, but if using CSV, the file must include the object version IDs. There are no additional options when using Replicate.
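The requirements above can be written down as a quick pre-flight check before you open the console. This is only an illustrative sketch of the rules as stated in this article; `check_job_plan` and its parameters are hypothetical names, not an AWS API.

```python
def check_job_plan(operation, manifest_type, destination_count, has_version_ids=False):
    """Return a list of problems with a planned job.

    An empty list means the plan fits the Copy/Replicate rules described above.
    """
    problems = []
    if operation not in ("copy", "replicate"):
        problems.append(f"Unknown operation: {operation!r}")
    if operation == "copy" and destination_count != 1:
        problems.append("Copy allows only 1 destination bucket; use Replicate for several.")
    if operation == "replicate" and manifest_type == "csv" and not has_version_ids:
        problems.append("Replicate with a CSV manifest requires object version IDs.")
    return problems


# A Copy job to two buckets is flagged; a Replicate job with version IDs is not.
print(check_job_plan("copy", "csv", destination_count=2))
print(check_job_plan("replicate", "csv", destination_count=2, has_version_ids=True))
```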

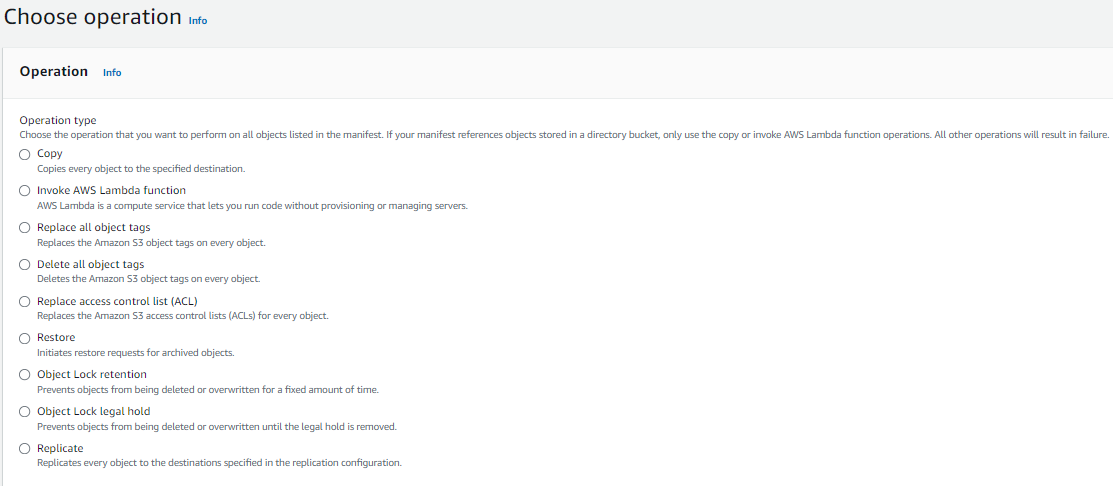

4. After choosing your manifest options, you will need to pick the appropriate operation. For this article, you will use either Copy or Replicate.

- If choosing Replicate, AWS will use the information in the manifest file to replicate the appropriate objects to the destination bucket(s).

- If choosing Copy there are several options to customize the process.

- Copy destination – The destination bucket for all objects to be copied. If you need multiple destination buckets, choose Replicate instead.

- Storage class – Set the storage class of the objects after they are copied into the destination bucket.

- Server-side encryption – Use this to set a specific encryption policy if you do not want to use the default setup in the destination bucket.

- Additional checksums – AWS uses checksums to verify the integrity of the data being copied, ensuring it is not changed and no errors occur. You can choose to replace the default with another option that AWS provides.

- Object tags – If the objects being copied have tags assigned, you can choose what to do with those tags: copy them, replace them with new tags, or copy no tags. The option you choose applies to all tags on all objects.

- Metadata – Similar to object tags, you can choose whether to copy, replace, or not copy the metadata for objects. Certain metadata is added by AWS to every object, and additional user-controlled metadata can be added, but only when an object is uploaded. The only way to update metadata is by using a copy operation.

- Access control list (ACL) – By default, the objects in the destination bucket will grant permissions to the source bucket account. You can choose to disable this if your account does not need access to those objects. Similarly, you can choose to grant access to other accounts or make the object publicly accessible.

- Object Lock legal hold – Use this to protect the objects in the destination bucket from deletion until the hold is removed. Users with the appropriate permissions can still delete the object (s3:DeleteObject) or turn the hold off (s3:PutObjectLegalHold). For extra security, limit the permissions users have and/or explicitly deny delete permissions on objects with a hold via IAM or bucket policies. Object Lock must already be turned on in the destination bucket to use this option.

- Object Lock retention – Similar to legal hold, this option prevents objects from being deleted until a specified date. Once the date has passed, objects can be deleted by anyone with permission. Object Lock must already be turned on in the destination bucket to use this option.
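If you script the job instead of using the console, the Copy options above map onto an operation description. The sketch below builds that description as a plain dictionary. The field names follow the S3 Control CreateJob request shape (`S3PutObjectCopy`) as I understand it, so verify them against the current API reference. The helper name `build_copy_operation` and the bucket name are hypothetical.

```python
from datetime import datetime, timezone


def build_copy_operation(target_bucket, storage_class="STANDARD", tags=None,
                         legal_hold=False, retain_until=None,
                         retention_mode="GOVERNANCE"):
    """Build the Operation entry for a Copy job as a plain dict."""
    copy = {
        "TargetResource": f"arn:aws:s3:::{target_bucket}",  # copy destination
        "StorageClass": storage_class,
        "MetadataDirective": "COPY",  # keep the source metadata
    }
    if tags is not None:
        # Replace the source tags with a new tag set.
        copy["NewObjectTagging"] = [{"Key": k, "Value": v} for k, v in tags.items()]
    if legal_hold:
        copy["ObjectLockLegalHoldStatus"] = "ON"
    if retain_until is not None:
        copy["ObjectLockMode"] = retention_mode
        copy["ObjectLockRetainUntilDate"] = retain_until
    return {"S3PutObjectCopy": copy}


operation = build_copy_operation(
    "example-destination-bucket",  # hypothetical bucket name
    tags={"team": "data"},
    retain_until=datetime(2030, 1, 1, tzinfo=timezone.utc),
)
print(operation)
```

Leaving an option out of the dictionary leaves that setting unspecified, which is how the sketch treats tags, legal hold, and retention by default.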

- Click Next and choose to configure additional options.

- Description – A short description of the batch job. All batch jobs will show up under the Batch Operations menu in S3.

- Priority – If setting up multiple batch jobs, you can set the priority level to ensure particular jobs are processed first.

- Completion report – You can choose to have a report generated at the end of the batch job. This can include either all tasks or just those that failed. It is a good idea to have the failed tasks reported so you can troubleshoot any issues. You can choose an S3 bucket in the source account to save the report.

- Permissions – The batch job uses an IAM role that must have the proper permissions to complete all the steps chosen in the options. It will need permissions to both the source and destination buckets and objects. If using tags or adding holds, the role must also have those permissions. AWS provides a full guide to what permissions are needed.

- Job tags – Each batch job can have multiple tags associated with it, just like objects. These tags can help track which user or department is managing the job, and they can be used in IAM policies to restrict user permissions to jobs with certain tags.
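The additional options above can also be captured in code when a job is created programmatically. The sketch below assembles them into a dictionary. The keys mirror the S3 Control CreateJob parameters as I understand them (`Description`, `Priority`, `Report`, `RoleArn`, `Tags`). The helper name, account ID, role ARN, and bucket name are placeholders.

```python
def build_job_settings(account_id, role_arn, report_bucket, description,
                       priority=10, failed_only=True, job_tags=None):
    """Collect the job-level options into one dict of request parameters."""
    return {
        "AccountId": account_id,
        "ConfirmationRequired": True,  # job waits for confirmation before it runs
        "Description": description,
        "Priority": priority,          # higher numbers are processed first
        "RoleArn": role_arn,
        "Report": {
            "Enabled": True,
            "Bucket": f"arn:aws:s3:::{report_bucket}",
            "Format": "Report_CSV_20180820",
            "Prefix": "batch-reports",
            "ReportScope": "FailedTasksOnly" if failed_only else "AllTasks",
        },
        "Tags": [{"Key": k, "Value": v} for k, v in (job_tags or {}).items()],
    }


settings = build_job_settings(
    account_id="111122223333",                                  # placeholder
    role_arn="arn:aws:iam::111122223333:role/example-batch-role",  # placeholder
    report_bucket="example-report-bucket",                      # placeholder
    description="Replicate existing objects",
    job_tags={"department": "platform"},
)
print(settings["Report"]["ReportScope"])
```

Together with an operation and a manifest entry (not shown here), a dictionary like this could be passed as keyword arguments to the `create_job` call of an S3 Control client.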

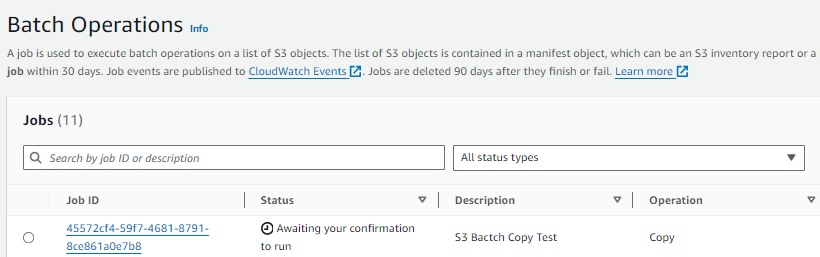
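For the Permissions option, the role needs a trust policy that lets S3 Batch Operations assume it, plus permissions on the buckets involved. The sketch below shows a deliberately incomplete starting point for a Copy job. The action list is an assumption and not exhaustive: manifest and report bucket access, ACLs, and Object Lock permissions are left out. Consult the AWS permissions guide for the full set. Bucket names are placeholders.

```python
import json


def build_role_policies(source_bucket, destination_bucket):
    """Return (trust_policy, permissions_policy) dicts for a basic Copy job role."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            # Service principal used by S3 Batch Operations.
            "Principal": {"Service": "batchoperations.s3.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {   # read from the source bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectVersion",
                           "s3:GetObjectTagging", "s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{source_bucket}",
                             f"arn:aws:s3:::{source_bucket}/*"],
            },
            {   # write to the destination bucket
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:PutObjectTagging"],
                "Resource": [f"arn:aws:s3:::{destination_bucket}/*"],
            },
        ],
    }
    return trust_policy, permissions_policy


trust, permissions = build_role_policies("example-source", "example-destination")
print(json.dumps(trust, indent=2))
```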

- Click Next and review all your settings. Once verified, click Create job. You will be taken to the Batch Operations page, where you can monitor all batch jobs.

- When your job is ready to run, the Status will change to “Awaiting your confirmation to run.”

- Click on the Job ID link to open the job.

- On the top right, click on Run job.

- The run job page will load. Scroll down and click on Run job.

- Once the job is done, it will show the Status of either Completed or Failed.

- If status is Failed, the reason will show under Reason for termination. The most common issue is a lack of permissions; a full list can be found in the AWS documentation.

- If status is Completed (and you chose to create a report), the link will show at the bottom of the page under Completion report. The report will contain a list of objects, status, and reasons for any failures. A list of all errors can be found in the AWS documentation.

- Once the job is done running, it can be cloned to create another batch operation by clicking Clone job at the top right of the Job page. This is especially useful when a job ends in failure: after troubleshooting, the job can be created again with a few clicks. It is not possible to run the same job multiple times. If you need to run it again, it must be cloned into a new job.
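The confirm-and-monitor steps above can also be scripted. The sketch below assumes a client object that exposes `describe_job` and `update_job_status` with the keyword arguments shown, which is how the boto3 S3 Control client behaves to my knowledge, and that a job awaiting confirmation reports the status `Suspended`. The function name `run_and_wait` and the polling defaults are hypothetical.

```python
import time

# Statuses after which the job will not change any further.
TERMINAL_STATUSES = {"Complete", "Failed", "Cancelled"}


def run_and_wait(client, account_id, job_id, poll_seconds=30, max_polls=120):
    """Confirm a job that is awaiting confirmation, then poll until it finishes.

    Returns (status, failure_reasons).
    """
    job = client.describe_job(AccountId=account_id, JobId=job_id)["Job"]
    if job["Status"] == "Suspended":
        # Equivalent to clicking Run job in the console.
        client.update_job_status(AccountId=account_id, JobId=job_id,
                                 RequestedJobStatus="Ready")
    for _ in range(max_polls):
        job = client.describe_job(AccountId=account_id, JobId=job_id)["Job"]
        if job["Status"] in TERMINAL_STATUSES:
            return job["Status"], job.get("FailureReasons", [])
        time.sleep(poll_seconds)
    raise TimeoutError(f"Job {job_id} did not finish after {max_polls} polls")


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   status, reasons = run_and_wait(boto3.client("s3control"), "111122223333", job_id)
```

Because the client is passed in, the function can be exercised against a stub before it is pointed at a real account.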

Conclusion

Using AWS S3 Batch Operations provides a powerful and flexible solution for manually replicating objects that were uploaded prior to the creation of a replication rule. By following the steps outlined in this blog, you can efficiently manage the replication process and ensure your data is consistent across your buckets. Whether you need to replicate a large number of objects or handle occasional one-off replications, batch operations offer the tools you need to streamline these tasks. With proper setup and execution, you can maintain the integrity and accessibility of your S3 data, meeting your organizational requirements effectively.

For any questions, please contact us.